In this post I want to talk about fidelity, meaning the resolution we give to our product prototypes. Marty Cagan talks a lot about the different types of prototypes that can and should be used during discovery. A prototype should be only just good enough (i.e. have high enough fidelity) to verify an idea. We don’t want to spend any more time making it than necessary, because in the end we will throw it away once we build the real product.

To understand whether a feature will be valuable and usable, the designers can create wireframes/mock-ups/prototypes in a tool like Figma. Such a tool allows the designers to create realistic screens and also run simulations of the real app (just the flows, no data). The risk is that the designers go all-in, creating high-fidelity prototypes that the developers must treat essentially as a requirements document, with all the difficulties that entails:

- The developers have to build in increments (screen-by-screen), rather than being able to deliver iteratively (starting with a simple story and elaborating).

- Changes to the design mean that a large prototype has to be updated frequently, so the team spends time figuring out how to maintain the prototype rather than collaborating on delivery of the next story.

Also, the more waterfall the process is, the more overloaded the prototype becomes with all the information the developers need to build the product. The result is a very high-fidelity prototype that actually contains many different types of information:

- The flow (process) the user follows

- The structure of the information presented in each screen

- The graphical details (colour scheme, copy, etc.)

The Agile way

So how should we design prototypes as part of an iterative development process? There is still the need to capture the conceptual integrity; the team still need prototypes to verify the value and usability of potential solutions. The answer lies in the fidelity of the prototypes.

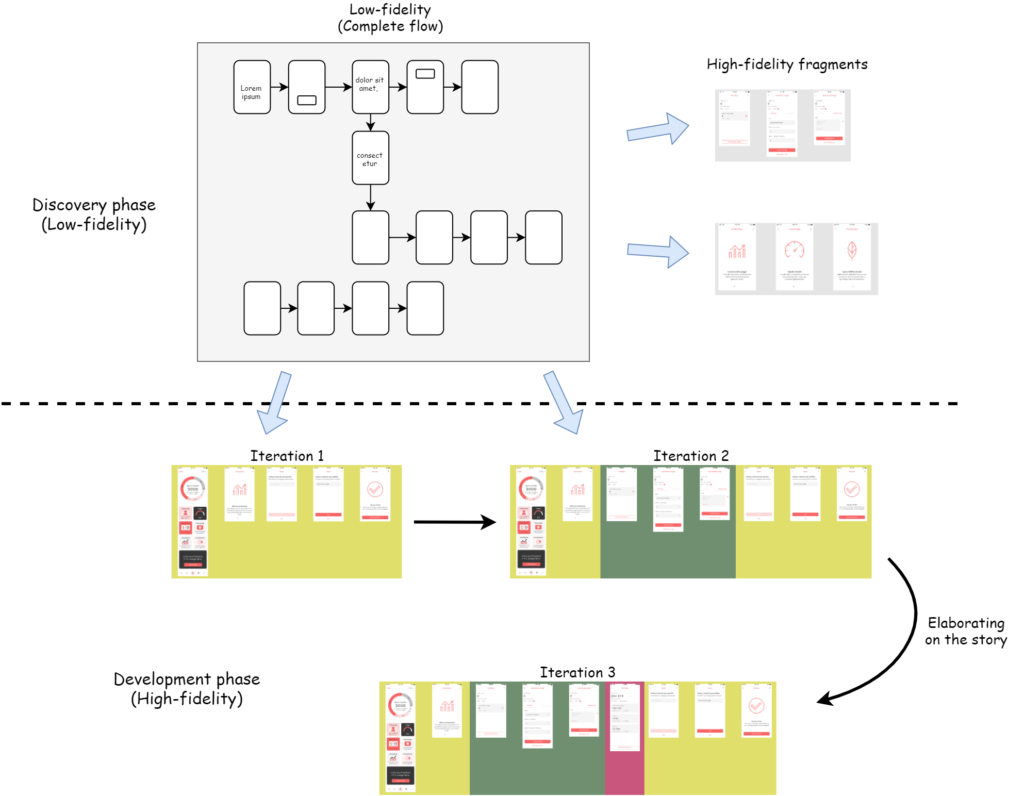

To verify the conceptual integrity of the solution, it is enough to capture the flow, name the activities and identify the states the customer or feature is in. High-fidelity prototypes would be replaced with simple boxes, arrows and labels, and would more closely resemble the process diagrams created using BPMN.
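To make the idea of "boxes, arrows and labels" concrete, here is a minimal sketch of a low-fidelity flow captured as nothing more than named states and named activities. The `Flow` class and the checkout example are hypothetical and only for illustration; they are not part of Figma, BPMN or any other tool.

```python
from dataclasses import dataclass, field


@dataclass
class Flow:
    """A low-fidelity prototype: named states joined by named activities."""

    # Maps each state to {activity name: next state}.
    transitions: dict[str, dict[str, str]] = field(default_factory=dict)

    def add(self, state: str, activity: str, next_state: str) -> None:
        """Draw one arrow: performing `activity` in `state` leads to `next_state`."""
        self.transitions.setdefault(state, {})[activity] = next_state
        # Register the target state too, even if nothing leaves it yet.
        self.transitions.setdefault(next_state, {})

    def walk(self, start: str, activities: list[str]) -> str:
        """Follow a sequence of activities and return the state reached."""
        state = start
        for activity in activities:
            state = self.transitions[state][activity]
        return state

    def dead_ends(self) -> list[str]:
        """States with no outgoing activity (end points or gaps in the flow)."""
        return sorted(s for s, out in self.transitions.items() if not out)


# Hypothetical checkout flow, made up for this example.
checkout = Flow()
checkout.add("Basket", "enter address", "Address entered")
checkout.add("Address entered", "choose payment", "Payment chosen")
checkout.add("Payment chosen", "confirm order", "Order placed")
checkout.add("Payment chosen", "edit address", "Address entered")
```

Walking the happy path with `checkout.walk("Basket", ["enter address", "choose payment", "confirm order"])` ends in `"Order placed"`, and `checkout.dead_ends()` shows which states have no way out. Nothing here says anything about screen layout or colours, which is the point: at this fidelity only the flow is modelled.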

If more fidelity is needed at this stage, in order to verify usability for instance, then it should be added only to those screens where it is needed, rather than to the whole prototype. But even if creating a full-scale high-fidelity prototype is justified at this stage, it should still not be delivered to the development team as a big bang, for the reasons stated above.

The story starts here

Once the team have identified a valuable, usable, feasible feature that can be built, the next step is to break down the work into smaller pieces, eventually arriving at INVEST-type stories that can be used to create potentially shippable software at every iteration. These stories should be supported by corresponding prototypes which contain all the structure and graphical details needed by the developers to be able to build the feature. The key here is that the designer creates specific prototypes for each story that the team have defined, rather than just referring to some combination of screens in an existing full-scale high-fidelity prototype.

Now the team will have a story-size high-fidelity prototype that contains only enough information for the story they will work on next. Even if the details change, the change will be on a much more manageable scale. In fact, creating this small high-fidelity prototype should not be the end of the collaboration between the designer and the developers. They should continue to work closely together during development and make changes directly to the product rather than updating the prototype (which will be obsolete as soon as the story is finished). This avoids the need for any elaborate maintenance procedures.

The flip-side of this is that the earlier full-scale low-fidelity prototype will also be easier to maintain, because it only represents the flows, which should remain quite stable even as discovery continues during the development phase. In other words, it is the structure of information and the graphical details that are most volatile and should therefore be modelled as close in time to development as possible (“just-in-time”).

Summary

Using this just-in-time approach, the designers would still do about the same amount of work as before. The difference is that the more volatile design elements would be created in collaboration with the team, and the most volatile elements would not be captured in Figma at all, but added directly to the product in collaboration with the developers, e.g. using pair-programming.